Gemini AI for Kids: Why Parents Must Slam the Brakes

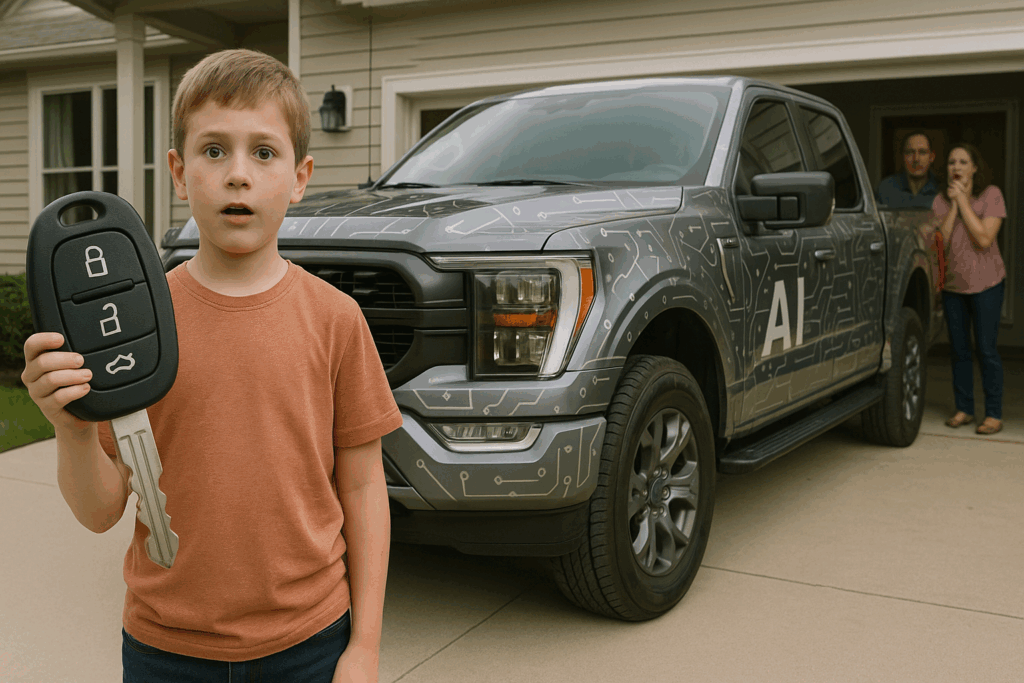

Google effectively just handed preteens the keys to a digital Ford F-150 and told parents it’s their responsibility to keep their kids safe.

In an email to parents last week, as reported in The New York Times and The Verge, Google informed parents that children under the age of 13 will soon have access to its Gemini AI chatbot. While acknowledging Gemini makes mistakes, Google urges parents to help their child “think critically about Gemini responses.”

Handing Gemini, a digital dynamo, to a preteen is like tossing a ten‑year‑old the keys to a two-and-a-half-ton pickup truck and wishing them well. At 35 mph, that truck packs enough kinetic energy to flatten a Prius.

Yet Google wants parents to trust that a few “guardrails” will keep its AI chatbot from spewing misinformation or leading their children into dangerous territory. Society’s traffic‑safety playbook demands training, supervision, and age minimums. AI should be no different.

Let’s Think Critically About This

As I have written before, I have a problem with Google.

Everyone who uses Chrome, Gmail, or Google’s online search can opt out of Google using their behavioral data or tracking them across the web, but most don’t.

In its announcement to parents, Google took a similar tack. Gemini will be on by default for preteen accounts managed through its Family Link program, but parents can disable it.

Has Gemini Screwed Up?

Yes. Gemini makes mistakes.

In late 2024, Gemini told a 29-year-old grad student, “Please die.” At the time, Google said the response violated its policies – gee, good to know – and that it had taken steps to keep it from happening again, but Gemini’s latest model is performing worse on safety.

With that in mind, no parent should let a young child use Gemini or any AI chatbot unsupervised. And you don’t have to take my word for that.

The Experts Weigh In

No social AI companion should be used by anyone under the age of 18, according to Common Sense Media. Its assessments concluded that safety measures are easily foiled, harmful stereotypes are easily provoked, and AI companions “routinely claimed to be real.”

In an article on generative AI’s risks and opportunities for children, UNICEF wrote:

Generative AI can instantly create text-based disinformation that is indistinguishable from, and more persuasive in swaying people’s opinion than, human-generated content. In a test by the Center for Countering Digital Hate, Google’s Bard generated misinformation without disclaimers when prompted on 78 out of 100 false and potentially harmful narratives, including climate, vaccines, LGBTQ+ hate and sexism.

Don’t Worry. Chat Happy.

According to Google’s email to parents, there’s no need to worry. Google has them covered.

Google cautioned parents:

- Show [your child] how to double-check responses

- Let [your child] know not to enter sensitive or personal info in Gemini

- Our filters try to limit access to inappropriate content, but they’re not perfect. [Your child] may encounter content you don’t want them to see

- Google Assistant Parental Controls will remain in Family Link and will continue to apply to Google Assistant devices in the home but will not apply to Gemini

You read that right. Google told parents to teach their children under 13 how to fact-check AI responses and admitted the kids may encounter stuff parents don’t want them to see.

Yes, verifying AI responses is a great skill we should all develop. Perhaps Google is doing this to foster broader understanding of AI and help prepare youth for the future.

Maybe, but for Google to unleash the Gemini AI chatbot on preteens after a single email to parents is irresponsible and egregious. And while Google says it won’t train on preteen data, that’s a leap of faith parents should not have to take.

What Should Happen?

Parents who use Google Family Link should disable Gemini access for their preteens. The risks are too great, and most U.S. adults agree. In a January 2025 YouGov poll, 51% of respondents had either “Not much confidence” or “No confidence at all” that technology companies developing AI would do so responsibly.

Parents who don’t use AI themselves owe it to themselves and their future employment prospects to get up to speed on what all the hubbub is about. Here are two introductory courses parents can work through with their kids:

- How AI Works and Exploring Generative AI: short 45-minute video series from Code.org

- Generative AI for Kids, Parents, and Teachers: a free five-hour Vanderbilt University course with hands‑on challenges you can try in real time

Policymakers and child advocates should demand a genuine, independently tested and validated kid-safe mode for AI. No PR videos; just real experts protecting kids.

I will call my legislators and urge them to take a stand to protect kids and help parents. One call may not turn the tide, but the issue is too important to sit on the sidelines.

Google should open Gemini for Kids to outside, third-party red team evaluations. And it should commit to publishing the results regardless of what is found.

Only with true transparency can we be sure kids will be safe using these state-of-the-art technologies.

Until that happens, the only lesson we need to learn is that, when a trillion‑dollar company tells us to trust it, we say, “Hell, no.”