Gemini Didn’t Cite a Single Source. Is This Normal?

Many people, myself included, have written a great deal lately about Google search.

Briefly, the concern is that Google no longer wants to connect users to websites other than its own. By using AI Overviews to provide a narrative answer on the search results page, Google can often satisfy a query without the user ever clicking through to a source site.

The issue is of utmost importance for publishers and other businesses that rely on search referral traffic. Entire industries depend heavily on the clicks they get from Google search results.

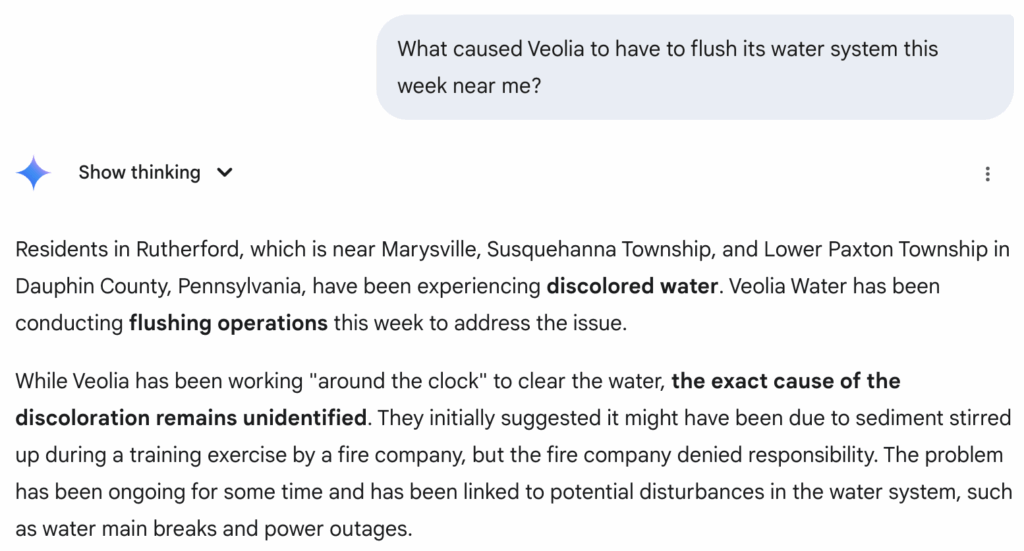

I had kept up with these developments, but they didn’t truly shock my system until this weekend, when I entered a simple query into Gemini, Google’s generative AI chatbot.

A local water system had made headlines over cloudy water and its requests that customers flush their taps with cold water. I wanted to know whether the company had determined what caused the issue.

Because I’m trying to use Gemini more to get a sense of its capabilities compared to other AI models, I opened the app and typed one sentence:

I read the text, shared it with my girlfriend, then stopped, stunned.

No sources. Gemini generated two paragraphs of text with no sources. None.

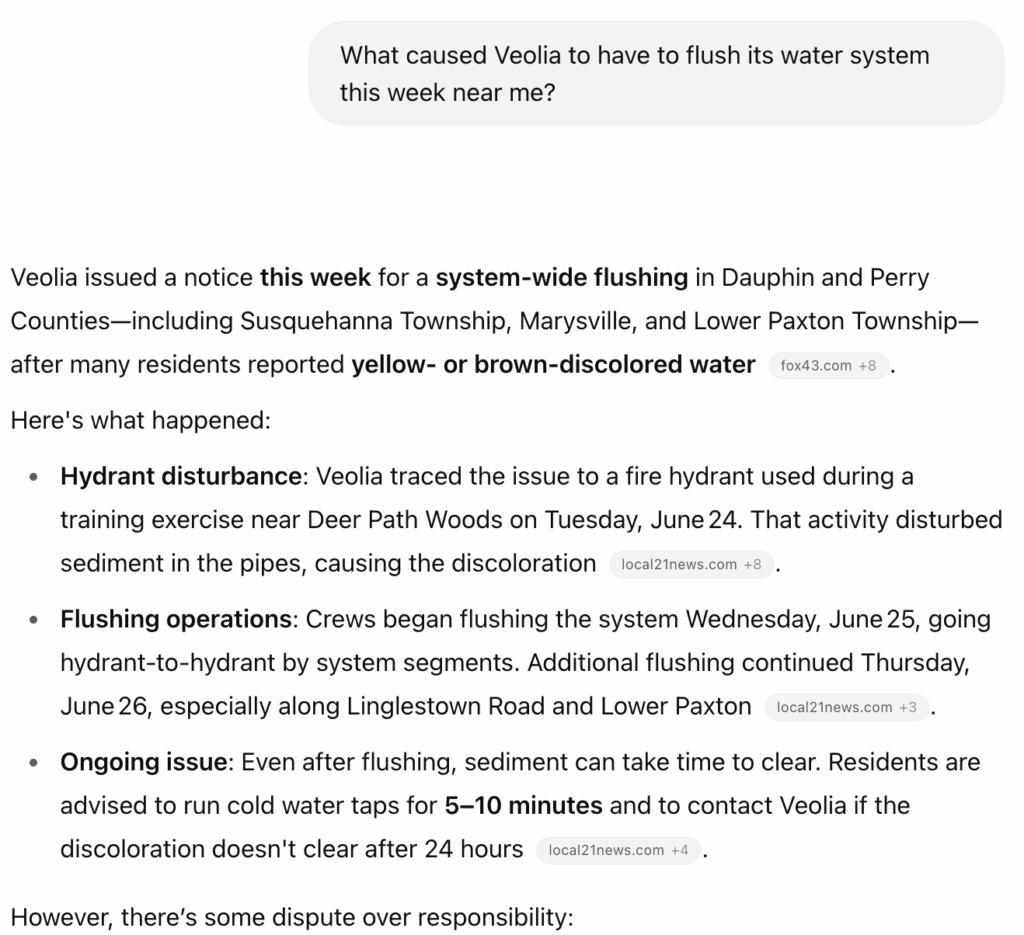

That seemed really odd, so I entered the exact same prompt into ChatGPT:

ChatGPT provided a pretty good response peppered with sources, which is exactly what Gemini should have done. And although ChatGPT did misspeak in the final section, where it repeated the water company’s disputed explanation for the issue, it stood digital head and shoulders above Gemini in giving me what I’d need to validate its answer.

Do You Validate?

When you have a conversation with generative AI, you must check its work. It’s basic AI literacy.

Even though models have become more powerful and hallucinate less, they are trained on vast swaths of the public (and often copyrighted) internet. And as we all know, not everything on the internet is accurate.

So any time you use AI, you have to “check its math,” which means validating that the source material is legitimate. There’s no way around it right now.

Is This a One-Off?

Do you use Gemini?

If you do, have you noticed it failing to provide sources in its responses?

Please let me know. I am very curious whether this is just me, or whether Google is experimenting to see if it can stop providing sources without people being bothered by it.

If Gemini responses do in fact frequently omit sources, industries that rely on search referral traffic have real cause for worry. The inevitable disruption from AI would accelerate in ways many haven’t anticipated.

As for me, I do most of my personal AI searches with Perplexity and ChatGPT, but I will weave Gemini into the mix in the coming weeks to see just how often it doesn’t provide sources.

To that end, I ran two other queries while preparing this article. One asked for gardening advice, and it returned a result chock full of sources.

The final query, though, returned five results without a single hyperlink. I had asked Gemini to find articles in which Google had been criticized for wanting to keep traffic on its own site instead of connecting users to other websites.

The links I used in this article? They came from ChatGPT, using the exact same prompt I had entered into Gemini.

No bueno.

Photo by Rinald Rolle on Unsplash